The seamless use of AWS Spot Instances for Apache Spark, Hive, and Presto has been a marquee feature of Qubole’s AWS offering since 2015. We have made consistent incremental progress in adding support for Spot Block reservations and Fleet Spot (by way of Heterogeneous Clusters), as well as the unique ability to dynamically replace on-demand instances with Spot instances.

However, innovation in cloud computing is endless. Recently, AWS introduced the ability to notify applications of Spot Instance termination in advance. This allows us to further increase the stability of QDS clusters configured with Spot instances. In this post, we detail some of the work we have done in this area over the last year. This post is specific to YARN-based clusters in Qubole (i.e., Hadoop, Hive, and Spark clusters), and we will follow up with Presto-specific blog posts.

Challenges with Spot Instances

Spot instances can be terminated by AWS at any point in time (with notification two minutes prior). As a result, clusters that use Spot instances may be subject to the following problems:

- Data Loss: If all replicas of a particular HDFS block are stored on Spot nodes and these nodes become part of a Spot loss, we will not be able to recover those blocks.

- Running Job Failures: Task or executor failures (in Spark) are recoverable to some extent because of speculation and retries. However, beyond a certain number of failures, even these mechanisms cannot prevent the job from failing. If an ApplicationMaster (AM) is running on a Spot node that is lost, the job cannot be recovered and fails.

- Job Submission Failures: In the job setup phase, job jars and conf files are copied to HDFS. Writes to HDFS may fail if lost Spot nodes are part of the HDFS write pipeline. This may lead to the failure of jobs while in the submission phase.

- Job Delays: Clusters may go well below the desired size and cause delays in running jobs and future jobs.

- Jobs Getting Stuck: An ApplicationMaster might have containers that were allocated on nodes that are now lost. The AM keeps retrying to launch containers on those nodes for some time before asking for new containers. Similarly, reducers keep trying to fetch the output of Map tasks from lost nodes before the Map tasks are retried.

In the next sections, we discuss how we tackle the above problems using a combination of pre-existing and new techniques based on Spot instance termination notifications.

Handling Spot Losses: The Basics

As previously mentioned, the ability to use Spot instances reliably has been a core feature of Qubole, and several strategies were already in place before Spot instance termination notifications became available. These include:

- We have developed our own custom Block Placement Policy for HDFS, which ensures that at least one replica of all HDFS blocks is present on an on-demand node. This block placement policy protects against Spot loss by providing one replica on a stable node, which can then be replicated to a different node. This handles the problems with HDFS data loss.

- We have also modified the YARN scheduler to ensure that ApplicationMasters are never scheduled on Spot nodes (but can be run on Spot Block nodes). This avoids job failures caused by an AM being lost. Shell commands in Qubole are similarly scheduled since they are often responsible for launching other long-running tasks/jobs.
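
The placement rule in the first bullet can be illustrated with a small sketch. This is not Qubole's HDFS policy (which is not shown in this post); `Node`, `is_spot`, and `choose_replica_nodes` are hypothetical names, and the rule for filling the remaining replicas (take the other nodes in the order given) is an assumption made only to keep the example short.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Node:
    name: str
    is_spot: bool  # hypothetical flag: True for Spot, False for on-demand


def choose_replica_nodes(nodes: List[Node], replication: int = 3) -> List[Node]:
    """Pick replica targets so that at least one is an on-demand node."""
    on_demand = [n for n in nodes if not n.is_spot]
    if not on_demand:
        raise ValueError("no on-demand node available for the stable replica")
    # Reserve the first replica for a stable (on-demand) node.
    chosen = [on_demand[0]]
    # Assumption: fill the remaining replicas from the other nodes in order.
    others = [n for n in nodes if n != chosen[0]]
    chosen.extend(others[: replication - 1])
    return chosen


cluster = [Node("s1", True), Node("s2", True), Node("od1", False), Node("s3", True)]
targets = choose_replica_nodes(cluster)
```

Even if `s1` and `s2` are lost together, the replica on `od1` survives and can be re-replicated to another node.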

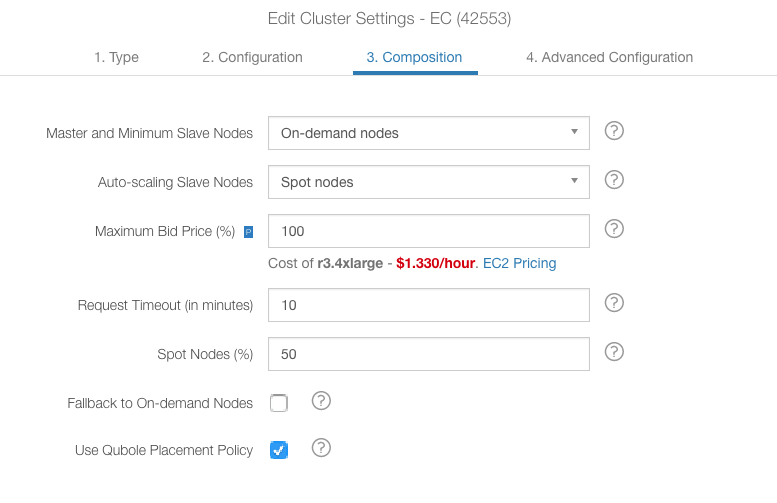

Finally, Qubole allows users to configure the desired percentage of Spot nodes for a cluster and works to maintain that ratio as nodes are added, deleted, or lost, and as Spot market availability varies. This balance of on-demand and Spot nodes is required for reliable and efficient performance at a lower cost.
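
As a rough illustration of maintaining a configured Spot percentage, the arithmetic below splits a batch of new nodes between on-demand and Spot so that the cluster moves toward the target. The function name `split_new_nodes` and its parameters are hypothetical, and the real rebalancing logic (which also has to react to Spot market availability) is certainly more involved.

```python
from typing import Tuple


def split_new_nodes(on_demand: int, spot: int, to_add: int, spot_pct: float) -> Tuple[int, int]:
    """Return (new_on_demand, new_spot) that moves the cluster toward spot_pct."""
    target_total = on_demand + spot + to_add
    target_spot = round(target_total * spot_pct / 100)
    # Never request more Spot nodes than we are adding, and never a negative number.
    new_spot = min(to_add, max(0, target_spot - spot))
    return to_add - new_spot, new_spot


# A 10-node cluster at 50% Spot loses three Spot nodes and replaces them.
replacement = split_new_nodes(on_demand=5, spot=2, to_add=3, spot_pct=50)
```

In this example all three replacements are requested as Spot nodes, which restores the 50% ratio.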

Using Spot Instance Termination Notifications in YARN

The Node Manager (NM) on every node in a Qubole YARN cluster listens for Spot termination notifications and notifies the Resource Manager (RM) whenever it receives one. The Resource Manager moves these nodes into a new “To Be Lost” state. From here on:

- The RM does not schedule any new containers on them, though existing containers continue to run. This is the first step to reducing the impact of Spot losses on running tasks/executors.

- We also start HDFS decommissioning of these nodes to ensure that they do not become part of any HDFS write pipeline.

- Autoscaling logic considers these nodes as lost nodes from the point of view of the cluster and upscales the cluster in advance to prepare for this node loss.

- The Resource Manager also sends the list of nodes that are about to be lost to all ApplicationMasters. Each application handles this notification in its own way. We will see how Spark and Tez handle this in more detail below.
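
The four steps above can be modelled with a toy state tracker. This is a sketch of the bookkeeping only, not YARN code: `ResourceManagerSketch`, `NodeState`, and every method name are hypothetical, HDFS decommissioning is represented by a plain set, and ApplicationMasters are represented by callbacks.

```python
from enum import Enum
from typing import Callable, Dict, List, Set


class NodeState(Enum):
    RUNNING = "RUNNING"
    TO_BE_LOST = "TO_BE_LOST"


class ResourceManagerSketch:
    """Toy model of the RM-side handling of a Spot termination notice."""

    def __init__(self, nodes: List[str]) -> None:
        self.state: Dict[str, NodeState] = {n: NodeState.RUNNING for n in nodes}
        self.hdfs_decommissioning: Set[str] = set()
        self.am_listeners: List[Callable[[List[str]], None]] = []

    def register_am(self, listener: Callable[[List[str]], None]) -> None:
        self.am_listeners.append(listener)

    def on_termination_notice(self, node: str) -> None:
        """Called when a node's NM reports a Spot termination notification."""
        if self.state.get(node) != NodeState.RUNNING:
            return  # unknown node, or the notice was already handled
        self.state[node] = NodeState.TO_BE_LOST
        # Keep the node out of future HDFS write pipelines.
        self.hdfs_decommissioning.add(node)
        # Tell every ApplicationMaster which nodes are about to be lost.
        doomed = self.nodes_to_be_lost()
        for listener in self.am_listeners:
            listener(doomed)

    def nodes_to_be_lost(self) -> List[str]:
        return sorted(n for n, s in self.state.items() if s is NodeState.TO_BE_LOST)

    def schedulable_nodes(self) -> List[str]:
        """Only RUNNING nodes receive new containers."""
        return sorted(n for n, s in self.state.items() if s is NodeState.RUNNING)

    def nodes_to_add(self, desired_size: int) -> int:
        """Autoscaling counts TO_BE_LOST nodes as already gone."""
        return max(0, desired_size - len(self.schedulable_nodes()))


rm = ResourceManagerSketch(["n1", "n2", "n3"])
received: List[List[str]] = []
rm.register_am(received.append)
rm.on_termination_notice("n2")
```

After the notice, `n2` still exists in the model (its containers keep running), but it is excluded from scheduling and the autoscaler already asks for one replacement node.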

Spot Termination Notification Handling in Spark

Spark has a robust fault tolerance model to deal with various kinds of failures. Tasks that fail to read files or hit transient errors are transparently retried. Entire stages can be retried if a task fails many times. Executor loss is detected when the Spark driver does not receive timely heartbeats and is handled by retrying the lost tasks on different executors. With increasing Spot usage, executor failures are becoming more common, which could cause a Spark application to miss its SLA. With Spot loss information available to the Spark application, it can now use this information in the following ways:

- Avoid some recompute by not scheduling tasks on affected executors because these tasks are likely to fail

- Avoid preempting ongoing tasks, allowing them to finish within the two-minute window if possible

When the Spark AM receives the Spot loss notification from the RM, it passes it to the Spark driver. The driver then:

- Identifies all the executors affected by the upcoming node loss

- Moves all of the affected executors to a decommissioning state, and no new tasks are scheduled on these executors

- Waits for the next 120 seconds for the executors to finish the running tasks

- Kills these executors after 120 seconds, proactively marking them as lost instead of waiting for their heartbeats to time out

- Restarts any tasks that failed as a result on other executors
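
The driver-side sequence above can be sketched as a small simulation. This is not Spark's scheduler code: `DriverSketch`, `Executor`, and the method names are hypothetical, and time is passed in explicitly as `now` (in seconds) so the example runs without sleeping. The 120-second grace period is the value given in the text.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Optional, Set

DECOMMISSION_GRACE_SECONDS = 120  # grace period stated in the post


@dataclass
class Executor:
    executor_id: str
    host: str
    running_tasks: Set[str] = field(default_factory=set)
    decommission_deadline: Optional[float] = None  # None means healthy


class DriverSketch:
    def __init__(self, executors: Iterable[Executor]) -> None:
        self.executors: Dict[str, Executor] = {e.executor_id: e for e in executors}
        self.pending_tasks: List[str] = []  # tasks waiting to be rescheduled

    def on_spot_loss_notice(self, hosts: Set[str], now: float) -> List[str]:
        """Move every executor on the affected hosts to a decommissioning state."""
        affected = []
        for e in self.executors.values():
            if e.host in hosts and e.decommission_deadline is None:
                e.decommission_deadline = now + DECOMMISSION_GRACE_SECONDS
                affected.append(e.executor_id)
        return sorted(affected)

    def schedulable_executors(self) -> List[str]:
        """No new tasks go to decommissioning executors."""
        return sorted(
            eid for eid, e in self.executors.items() if e.decommission_deadline is None
        )

    def tick(self, now: float) -> List[str]:
        """Kill executors whose grace period expired; requeue unfinished tasks."""
        killed = []
        for eid, e in list(self.executors.items()):
            if e.decommission_deadline is not None and now >= e.decommission_deadline:
                self.pending_tasks.extend(sorted(e.running_tasks))
                del self.executors[eid]
                killed.append(eid)
        return sorted(killed)


driver = DriverSketch([
    Executor("e1", "h1", {"t1"}),
    Executor("e2", "h2", {"t2"}),
    Executor("e3", "h1"),
])
affected = driver.on_spot_loss_notice({"h1"}, now=0.0)
killed_early = driver.tick(now=119.0)
killed_late = driver.tick(now=120.0)
```

Here task `t1` did not finish within the grace period, so it ends up in `pending_tasks` and can be started on `e2` as soon as the executors are killed, without waiting for a heartbeat timeout.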

Spot Loss Handling in Tez

Like Spark, the Tez engine is designed with fault tolerance as a fundamental feature. Tez tasks are deterministic and side-effect-free. Thus, the re-execution of tasks that fail due to node loss is generally successful, resulting in a successful run for the query. With the use of Spot nodes, the chance of task failures increases. As explained above, this is unlikely to cause queries to fail, but it can increase execution time due to task retries.

Spot losses can cause two types of failures:

- Failure of the running task because of the node being unavailable/shut down during the execution phase.

- Failure of dependent reducer tasks because map output is lost. As with most engines, Tez writes the map output of successful tasks to the local disk of the node where the map task ran. When that node is lost, the reducer tasks that depend on this map output also fail.

We have modified the Tez AM to receive the Spot loss information and use it to build a list of affected nodes. This list is used to avoid scheduling further tasks on these nodes, since such tasks would very likely fail. Handling the loss of map output in such situations is on our future roadmap.
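
The affected-node list described above amounts to a filter applied when tasks are placed. The sketch below shows the idea only; `TezSchedulerSketch` and its methods are hypothetical names and do not correspond to actual Tez classes.

```python
from typing import Iterable, Optional, Set


class TezSchedulerSketch:
    """Toy task placer that skips nodes reported as about to be lost."""

    def __init__(self) -> None:
        self.affected_nodes: Set[str] = set()

    def on_spot_loss_notice(self, nodes: Iterable[str]) -> None:
        # Accumulate notices; a node stays excluded once it has been reported.
        self.affected_nodes.update(nodes)

    def pick_node(self, candidates: Iterable[str]) -> Optional[str]:
        """Return the first candidate that is not on the affected list."""
        for node in candidates:
            if node not in self.affected_nodes:
                return node
        return None  # caller has to wait for, or request, a different node


scheduler = TezSchedulerSketch()
scheduler.on_spot_loss_notice(["n1"])
placement = scheduler.pick_node(["n1", "n2"])
```

Note that this only prevents new tasks from landing on a doomed node; map output already written there is still lost with the node, which is the case the post leaves to future work.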

Making Users Aware of Spot Loss

Apart from letting applications know of Spot loss, Qubole also informs the end user of such events. When a command slows down or fails, users can quickly confirm or rule out Spot loss as a potential culprit. At present, Qubole warns users if a Spot loss event occurred on the cluster during the lifetime of the command. In the future, we will provide near-real-time updates in the UI when a Spot loss occurs, and the user will be able to drill down into the individual applications that were affected, either because a running task on a Spot instance was killed or because required shuffle data was lost and the task had to be retried.

In Summary

Spot instances are an integral feature of Qubole’s big data offerings. We have shown that customers can save up to 80% on EC2 bills by running big data clusters on Qubole, and these features in part helped our customers save $140 million in AWS costs in 2017. However, this comes with the risk that Spot instances can be lost at any time. We have seen that around one to two percent of our cluster nodes are lost to Spot loss, as opposed to regular termination due to downscaling. While this may seem like a trivial number in the larger scheme of things, it amounts to more than 1,300 nodes lost per day. Qubole’s handling of Spot loss notifications, among other features, enables users to gain significant cost savings while minimizing the risk of job slowdown and failure.

Check out our Workload-Aware Autoscaling white paper to learn more about Qubole’s autoscaling capabilities for big data workloads.