This post was written by Chris Chanyi, Senior Data Architect at TubeMogul. It originally appeared here.

TubeMogul handles over a trillion HTTP requests a month. To understand how we handle this amount of data, it’s important to understand how we started. Read on for an in-depth look at our big data history.

One of our recent blog posts detailed how we handle over A Trillion HTTP Requests a Month – a dizzying number. All of these HTTP requests mean that TubeMogul receives and stores some kind(s) of data for each request that needs to move through our data pipeline. While getting that data isn’t easy; storing, retrieving, querying, and aggregating all of this data is even more difficult. Thanks to a number of cloud services and improvements to our services, handling this data has gotten easier over the years. To understand how we handle this volume of data, it’s important to understand how we started…

Starting with Hadoop & AWS

In early 2008, TubeMogul was pivoting to an analytics company and was still processing data using an OLTP and Data Warehouse setup. That year, it was increasingly obvious a clustered and scalable solution was required and cloud computing was the answer. Yahoo had just moved its search service to Hadoop, an open-source implementation of the big data papers published by Google not long before. Hadoop was still new; that year they went from 3 featured companies on their website to 20 companies “powered by Hadoop” and TubeMogul got busy rebuilding their analytics platform using this new solution. The speed and scale at which TubeMogul finished this process were assisted heavily by our migration to AWS. Without the cloud migration, it’s difficult to imagine we would have succeeded.

Of course, those initial years were not without their challenges. AWS had had a few public outages that gave our Ops pause, but the adoption of Hadoop on AWS went as smoothly as we could have hoped considering the speed at which we deployed the service. We iterated fast and were able to gain the scale we needed quickly. Each spike in growth and each outage taught something new about handling large amounts of data (anyone who remembers the East Coast power outage of 2012 can sympathize: https://aws.amazon.com/message/67457 if you want to relive).

By early 2010, TubeMogul data growth using Hadoop, HBase, and Hive was massive. The ability to manage costs and simplicity in AWS grew as well. More tools began to appear and for a while, it felt like Amazon released a new major service every week. In the NoSQL world of AWS, the biggest was the emergence of Elastic MapReduce (EMR) web services. EMR required fewer operations support and allowed users to quickly spin up Hadoop clusters to perform anything from raw ETL to large Hive queries without the operational burden of managing the underlying hardware, OS, or software installation. TubeMogul picked up EMR and it became our go-to tool for Hadoop.

Stats Go Real-Time

At this time, we still processed all our stats data through a custom-built Hadoop-based platform. It wasn’t long before the demand for real-time updates and reduced reporting time necessitated a move to a real-time platform. TubeMogul replaced the Hadoop ETL pipeline with a Kafka and Storm-based system. (See our Real-Time Analytics blog post for more information on those services!) The same aggregations we ran in Hadoop now run in Storm in real-time, feeding our UI with metrics almost instantly after the event is consumed. The new platform also feeds a wide variety of new loaders performing constant streaming into our various end-points: Oursql (our custom-built low latency DB Engine), Vertica, S3, and now Druid as well. The last Hadoop cluster at TubeMogul was finally shut down, but our use of EMR continued. With our event-level data stored in S3, we performed many offline stats processes for debugging, reporting, and machine learning – all of this is still done in EMR. This worked well for a while, but cracks began to appear.

For starters, AWS charges by the hour so using one machine unit for 100 hours or 100 units for one hour are the same. Scaling massive clusters to finish time-sensitive queries became easy in EMR – you just spun larger clusters up and shut them down when you were done. That works great… until you forget to shut down the massive cluster for a week. Or when the AWS EMR API you are using is deprecated and the various tools you used for automated EMR management had to be upgraded as well (usually when you had the least time to do it too). Or when you found their Hive implementation a little old and slow, or you wanted a cool new feature in a new version, but it wasn’t available. Or worse yet, when the new version comes out finally and you accidentally choose it and it automatically upgrades your metadata store and corrupts every table. Yeah… that happened. This was about when Qubole came into the picture at TubeMogul.

TubeMogul first started using Qubole in 2013. It was meant to embrace the application cloud solution, provide everything EMR did, and more. Qubole had a simple-to-use UI, made it simple to start a cluster and run ad-hoc queries, and it had a much newer Hive version that was custom-tuned to run in the cloud and use S3 data more efficiently. (Qubole also contributes back to the community and is heavily involved in open-source Hadoop-based tools.) When we ran one-on-one comparisons, we found Qubole consistently outperformed EMR. By supporting our existing single sign-on SAML, we were able to connect our existing users to the Qubole environment. Adding new users was as simple as sending an invite email. Since our data was already in S3, we just had to create the clusters and recreate our external tables/views in the Qubole Hive meta-store. The clusters can be configured with a custom bootstrap for loading our existing custom UDFs, set specific Hive or Hadoop configuration settings, and configure private SSH keys to allow you to debug right on the hosts.

Early on, it was apparent that the ease of starting and spinning up clusters was a massive improvement over EMR. For one thing – they came up automatically once a query hit the cluster. A configurable idle time meant if it wasn’t being used, it was automatically shut down. This feature alone has probably saved us countless times.

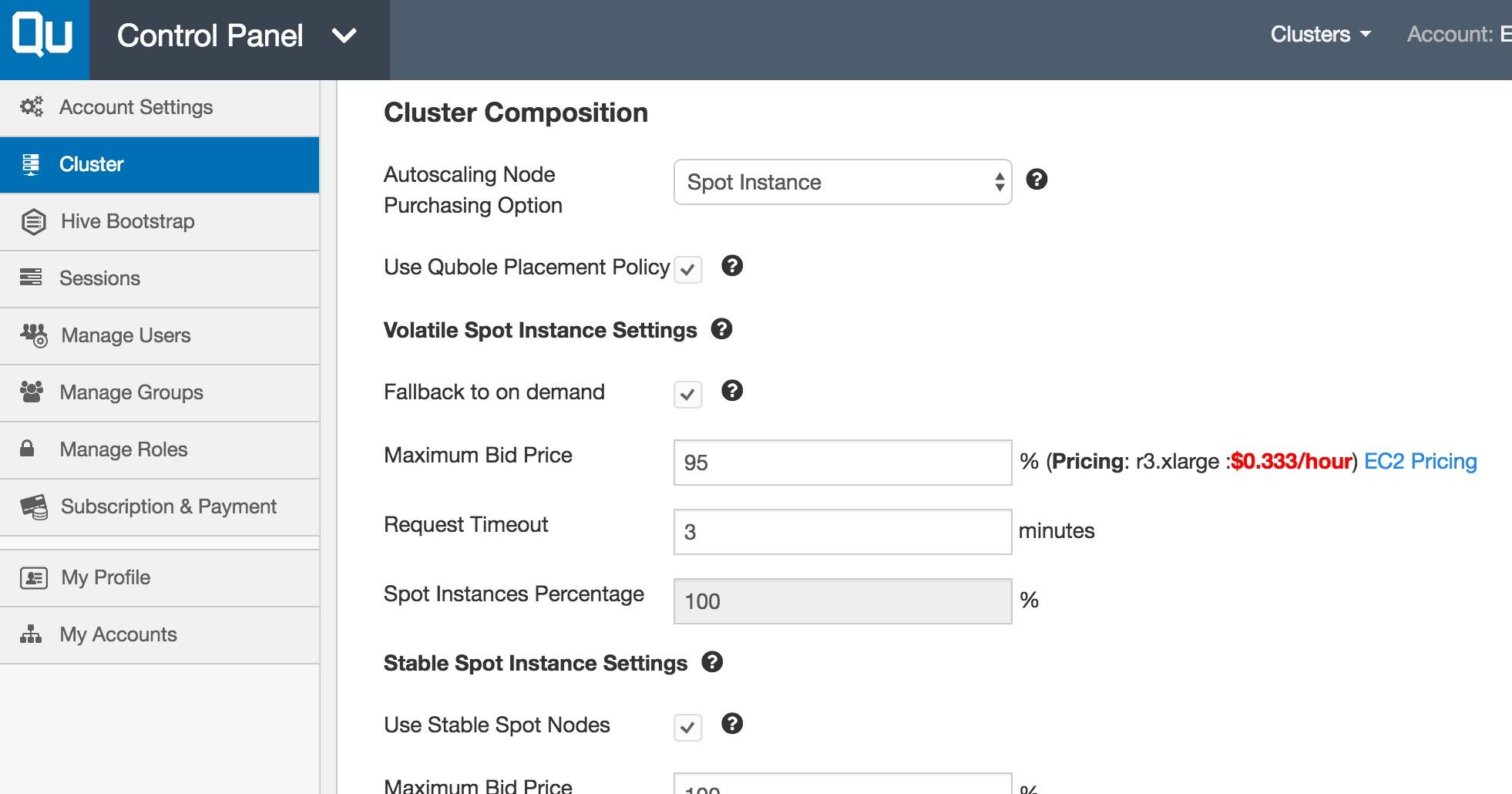

Another cost-saving feature that Qubole has is the use of AWS Spot instances. By configuring the cluster to use as many spot instances as desired, especially if you’re flexible in the cluster sizing or timing, you can make use of spare instances and save quite a bit over the on-demand pricing. With the wide variety of instance types to choose from, it was easy to find the right configuration for any cluster you may require. TubeMogul has 5 accounts with over a dozen clusters and it’s unlikely this arrangement could be managed in EMR.

Qubole also comes with a handy scheduler. A lot of our offline stats ETL jobs are built out using this scheduler. By processing data once it arrives, we can perform aggregations or conversions of data to be processed downstream, or even further aggregated or filtered for end-user consumption. The processes are easy to build and maintain. Even non-engineering groups have been able to add jobs to automate redundant tasks. As an added bonus, they also include an import/export tool. By adding taps to external sources, we can also integrate dimensional tables from MySQL and Vertica into S3 to perform joins in Hive. From QA to account management, to machine learning, TubeMogul runs many hundreds of ETL jobs and reports a day and many more ad hoc queries.

And Finally, Adding Spark and Presto

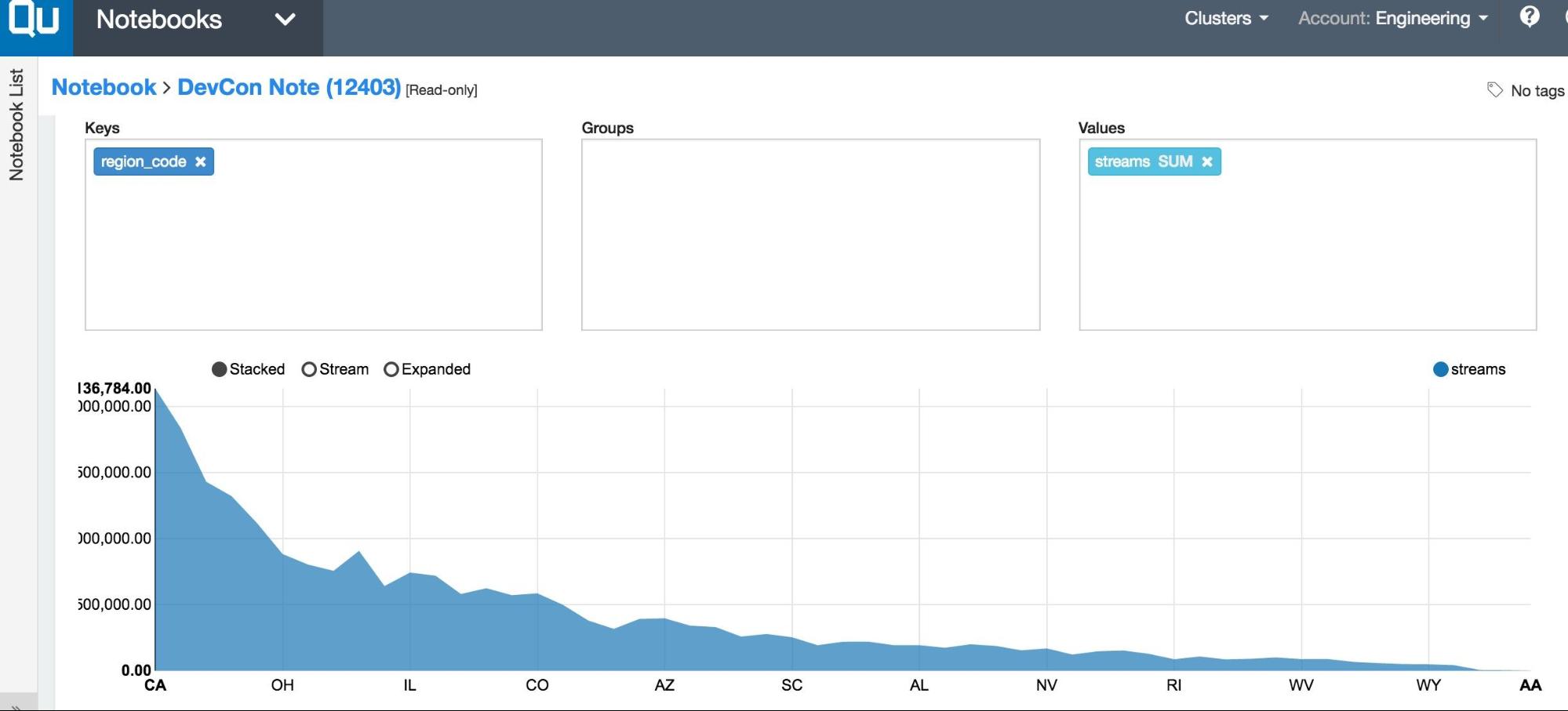

Qubole has also added other services for the cloud, e.g. Spark and Presto. By integrating Notebooks into the UI, end-users benefit from the interactive interface for data exploration. During TubeMogul DevCon in March, we had Qubole as a guest speaker to demonstrate the power of Notebooks and set up an easy-to-use example to show the speed and ease of using Spark and Presto to perform interactive queries and build custom notebooks.

While the new UI features are impressive, the next major stage for TubeMogul was continual Presto use for our reporting platform. The SQL syntax is slightly different from Hive, but the speed of Presto queries is remarkable. As a comparison test, we took a typical large star schema organized set of fact and dimensional tables and ran a large month-long query in both Hive (on EMR) and Presto (on Qubole). Although the hardware differed slightly, the large 40-node EMR cluster took a couple of hours to finish, whereas a much smaller 5-node Presto cluster was able to do this in under 10 minutes. Presto is able to get this boost by skipping the Hadoop job platform completely, using its own processes instead to stream data considerably faster. As well, by storing data in ORC format, Presto is able to perform better read optimizations from S3. This drastically cut down on the amount of data it pulls in compared to Hive’s brute force method. Presto isn’t meant for doing large ETL jobs, it lacks partition management and its tasks are not fault tolerant. If one fails, the query fails. But, for running ad-hoc queries or daily or monthly reports, the speed with which Presto can query data means we can run a much smaller cluster for an even smaller amount of time, saving even further on AWS costs.

TubeMogul recently pushed forward with migrating one of the last projects still using EMR, a reporting infrastructure that performed Hive queries to aggregate and sends out daily reports to partners, customers, and employees. By migrating this to Presto and Qubole, engineers have been able to remove a large swath of source code that isn’t required anymore. (If you’ve ever done that, you know how good that feels.) The project has been stripped down to the bare business requirements of the queries and the workflow in which to run them. The team at Qubole has been instrumental in assisting on these endeavors, from building UDFs in Presto, to cluster setup and testing, they have always been quick to respond to issues and support calls. Our reports run an order of magnitude faster on Presto and the cost savings are substantial. It has allowed us to start designing new features that weren’t possible before and streamline the business further.