As a best practice, we recommend that users create a few large Presto clusters shared across teams, instead of many small clusters (one per team). This reduces operational and maintenance overhead, because every small cluster must always stay up with some minimum number of nodes, and those nodes consume resources.

A requirement is to have isolation mechanisms between different teams such that:

- Queries from one team do not interfere with another team’s queries.

- Queries from a single team should not be able to upscale the cluster to its maximum size using Presto’s inbuilt autoscaling capabilities on Qubole.

While the first requirement stems from the need for performance and isolation guarantees, the second is driven primarily by cost considerations.

Presto Resource Groups

Presto already supports resource groups, which satisfy some of these requirements. Presto Resource Groups provide a template for grouping users based on their priority and the structure of the organization, in order to manage resource allocation among users. Under this system, each user belongs to a resource group and is subject to the limits defined for that group. Resource groups can limit the number of concurrent queries, the memory, and the CPU that each group is entitled to. This helps meet some of the performance and isolation guarantees. However, resource groups lack any mechanism to provide cost isolation across different groups.
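To make the idea concrete, here is a minimal sketch of what a resource group definition could look like, built in Python and serialized to JSON. The property names (`rootGroups`, `softMemoryLimit`, `hardConcurrencyLimit`, `maxQueued`, `subGroups`, `selectors`) follow the open-source Presto file-based resource group configuration; the group names, users, and limit values are hypothetical and chosen only for illustration.

```python
import json

# Illustrative resource group definition. Group names, users, and limit
# values are hypothetical; property names follow open-source Presto.
resource_groups = {
    "rootGroups": [
        {
            "name": "bu1",
            "softMemoryLimit": "60%",      # share of cluster memory
            "hardConcurrencyLimit": 20,    # maximum running queries
            "maxQueued": 100,              # maximum queued queries
            "subGroups": [
                {
                    "name": "team1",
                    "softMemoryLimit": "40%",
                    "hardConcurrencyLimit": 10,
                    "maxQueued": 50,
                }
            ],
        }
    ],
    # Selectors map an incoming query to a group; "user" is a regex.
    "selectors": [
        {"user": "alice|bob", "group": "bu1.team1"},
        {"group": "bu1"},  # catch-all for everyone else
    ],
}

config_text = json.dumps(resource_groups, indent=2)
print(config_text)
```

Note that nothing in this definition bounds how far a group can scale the cluster, which is the gap the rest of this post addresses.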

Dynamic sizing of Presto Clusters on Qubole

Presto on Qubole recently introduced a feature around dynamic sizing of clusters based on Resource Groups. This feature provides cost isolation between different teams and business units while sharing the same physical cluster. It also extends these Resource Groups to define autoscaling limits on a per-user basis. With this feature, customers can define the maximum number of nodes that a resource group can scale the cluster up to and regulate autoscaling.

Dynamic sizing of Presto clusters using Resource Groups helps in the following ways:

- Prevents a single user or a few users from scaling the cluster to its maximum size.

- Allows admins to consolidate multiple small clusters into a single cluster which then:

  - Brings down costs by running at a minimum size smaller than the aggregated minimum size of multiple clusters

  - Improves end-user experience in most cases, because a bigger cluster (the sum of the individual small cluster sizes) is now available

  - Improves cluster efficiency by careful resizing of the maximum size

  - Improves admin experience by having fewer clusters to manage

  - Reduces query failures due to “Local Memory Exceeded” errors

Using Resource Groups for Dynamic Sizing of Presto Clusters

Presto autoscaling has been modified to calculate the maximum size of a cluster dynamically using custom scaling limits defined by cluster administrators. This calculation is driven by the number of resource groups that either currently have queries in the execution pipeline or had queries within a predefined activity period. With a calculated max-size (which will be smaller than or equal to the max-size defined in a cluster configuration), the cluster can scale up only to the calculated max-size. Similarly, a cluster can now scale down when the cluster is beyond the calculated max size and is underutilized.
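The calculation can be sketched roughly as follows. This is a simplified illustration, not Qubole's implementation: it assumes the calculated max size is the sum of the limits of all active groups, capped at the configured max size, and it uses a hypothetical 300-second activity period. The `GroupActivity` type and `calculated_max_size` function are names invented for this sketch.

```python
from dataclasses import dataclass


@dataclass
class GroupActivity:
    node_limit: int         # the group's scaling limit, in nodes
    running_queries: int    # queries currently in the execution pipeline
    last_query_end: float   # timestamp (seconds) of the group's last query


def calculated_max_size(groups, configured_max, now, activity_period_s=300.0):
    """Sum the limits of active groups, capped at the configured max size.

    A group counts as active if it has running queries or had a query
    within the activity period (assumed to be 300 seconds here).
    """
    active_total = sum(
        g.node_limit
        for g in groups
        if g.running_queries > 0 or (now - g.last_query_end) <= activity_period_s
    )
    return min(active_total, configured_max)


groups = [
    GroupActivity(node_limit=30, running_queries=2, last_query_end=0.0),    # active now
    GroupActivity(node_limit=25, running_queries=0, last_query_end=900.0),  # recently active
    GroupActivity(node_limit=64, running_queries=0, last_query_end=100.0),  # idle
]
print(calculated_max_size(groups, configured_max=100, now=1000.0))  # prints 55
```

With only the first two groups active, the cluster can grow to 55 nodes even though its configured max size is 100. Once the recently active group ages out of the activity period, the calculated max size drops to 30 and the cluster becomes eligible to scale down.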

Presto Resource Groups already provide a hierarchical and templatized mechanism to define users and groups. We have extended them with an upscaling limit for each user and group. With this feature, admins can group users according to their projects, organizations, or business units, and define scaling limits for these groups and users.

Defining Custom Scaling Limits for Users/Groups:

A new Resource Group Property, maxNodeLimit, has been added to define the custom scaling limit. It denotes the maximum number of nodes this group can request from the cluster. It may be specified as an absolute number of nodes (e.g. 10) or as a percentage (e.g. 10%) of the cluster’s maximum size. The default value for this config is 20%.
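As a rough illustration of the two accepted forms, the sketch below converts a maxNodeLimit value into a node count. The function name `resolve_max_node_limit`, the rounding down of fractional results, and the capping at the cluster's maximum size are assumptions made for this example, not documented behavior.

```python
import math

DEFAULT_MAX_NODE_LIMIT = "20%"  # default stated in the text above


def resolve_max_node_limit(value, cluster_max_size):
    """Convert a maxNodeLimit value such as 10 or "10%" into a node count."""
    if value is None:
        value = DEFAULT_MAX_NODE_LIMIT
    text = str(value).strip()
    if text.endswith("%"):
        percent = float(text[:-1])
        # Rounding down is an assumption; the source does not specify it.
        nodes = math.floor(cluster_max_size * percent / 100)
    else:
        nodes = int(text)
    # A group can never request more than the cluster's maximum size.
    return min(nodes, cluster_max_size)


print(resolve_max_node_limit(10, 100))     # absolute limit
print(resolve_max_node_limit("10%", 100))  # percentage of max size
print(resolve_max_node_limit(None, 100))   # falls back to the 20% default
```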

In addition to the new config, this feature divides Resource Groups into two types:

- User-defined resource group: These are the standard Resource Groups that admins can define through the resource-groups.json file as per the documentation.

- Generated resource group: These are the system-generated resource groups. Any user who is not mapped to any user-defined resource groups gets assigned a newly generated resource group which has the maxNodeLimit of “20%”.

This also serves as the default per-user limit for this feature when user-defined resource groups are not configured. In such cases, each user who runs queries is assigned a generated resource group, which limits that user’s upscaling capability to 20% of the cluster’s maximum size.

Detailed Examples of Dynamic Sizing of Presto Clusters using Resource Groups

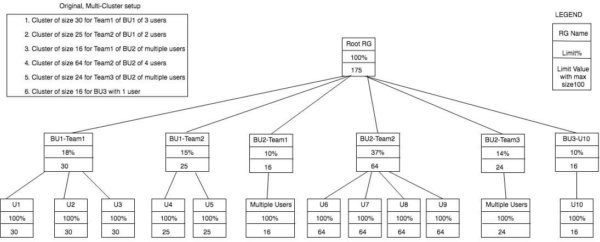

Let us now analyze the impact and usage of this feature with the example of an organization where admins have created the following clusters for different Business Units (BUs) in their account:

- A cluster of size 30 for Team1 of BU1, with 3 users

- A cluster of size 25 for Team2 of BU1, with 2 users

- A cluster of size 16 for Team1 of BU2, with multiple users

- A cluster of size 64 for Team2 of BU2, with 4 users

- A cluster of size 24 for Team3 of BU2, with multiple users

- A cluster of size 16 for BU3, with 1 user

This isolation structure of BUs, teams, and users can be represented as Resource Groups with scaling limits on BUs, teams, and even individual users. The same set of clusters can be consolidated into one cluster whose maximum size is the sum of the individual cluster sizes, with the structure represented as Resource Groups as given below. This is the recommended first step for admins when consolidating clusters.

Setup 1: Consolidating multiple clusters to one cluster:

With this setup:

- The maximum cluster size is the sum total of independent cluster sizes.

- Users of BU1-Team1 can scale the cluster to 30 nodes, which is the same as their capability in a separate cluster.

- Similar limits apply to all the teams.

- Hence, in such a consolidation, end-users do not see any degradation in performance and their scaling capability is not curtailed. On the other hand, they will see the benefits of larger consolidated clusters as mentioned initially.
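The arithmetic behind Setup 1 can be checked with a short sketch. The cluster sizes come from the example above; the dotted group names are hypothetical labels used only here.

```python
# Former per-team cluster sizes, taken from the example above.
# The dotted names are hypothetical labels for this sketch.
former_clusters = {
    "BU1.Team1": 30,
    "BU1.Team2": 25,
    "BU2.Team1": 16,
    "BU2.Team2": 64,
    "BU2.Team3": 24,
    "BU3": 16,
}

# Setup 1: the consolidated cluster's maximum size is the sum of the old sizes.
consolidated_max_size = sum(former_clusters.values())

# Each team keeps its old cluster size as its scaling limit.
max_node_limits = dict(former_clusters)

print(consolidated_max_size)          # prints 175
print(max_node_limits["BU1.Team1"])   # prints 30
```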

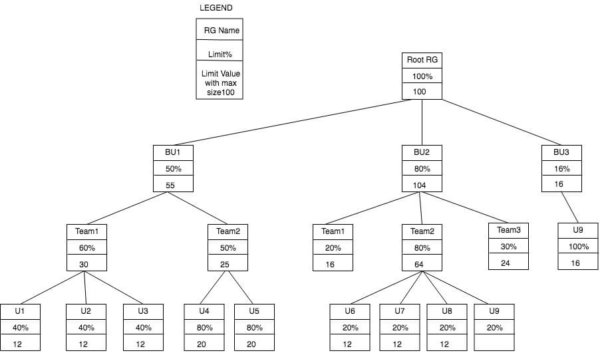

Now, let’s consider a different structure with finer control over team and user limits. Here, we look at the Resource Groups representation for the same organization, where admins have decided to consolidate these clusters into a single smaller cluster of maxSize 100 and have also applied per-user scaling limits, along with BU-level scaling limits based on BU budgets:

Setup 2: Consolidating multiple clusters to one cluster of smaller size:

In this setup, there are 2 major differences:

- We have added user limits along with team limits, and hence:

  - Individual users of BU1-Team1 can scale the cluster to 12 nodes, which controls cost when only 1 or 2 users are active.

  - Users of BU1-Team1, when all active, can scale the cluster to 30 nodes, which is the same as their capability in a separate cluster.

- The maximum cluster size is less than the sum total of independent cluster sizes:

  - There would be a performance impact when all users are active at the same time, since earlier the users had 175 total nodes, as compared to 100 nodes now.

  - However, this brings down the costs and provides a similar experience to a multi-cluster setup if only a few teams are active at the same time.
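The interaction between user limits and the team limit for BU1-Team1 can be sketched as below. It assumes that per-user limits add up across active users and that the team limit caps the total; the function name `team_scaling_limit` is hypothetical. The numbers (12 nodes per user, 30 nodes for the team, 3 users) come from the example.

```python
def team_scaling_limit(active_users, per_user_limit, team_limit):
    """Nodes a team can request: per-user limits add up, capped by the team limit.

    Additive per-user limits are an assumption made for this sketch.
    """
    return min(active_users * per_user_limit, team_limit)


# BU1-Team1 in Setup 2: 3 users, 12 nodes per user, 30 nodes for the team.
for active in range(1, 4):
    print(active, team_scaling_limit(active, per_user_limit=12, team_limit=30))
# prints:
# 1 12
# 2 24
# 3 30
```

With one or two active users the team stays well under its old cluster size, which is where the cost saving comes from; with all three active, the team limit of 30 takes over.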

We will now go into the details of the second setup and explore the different scenarios that can come up.

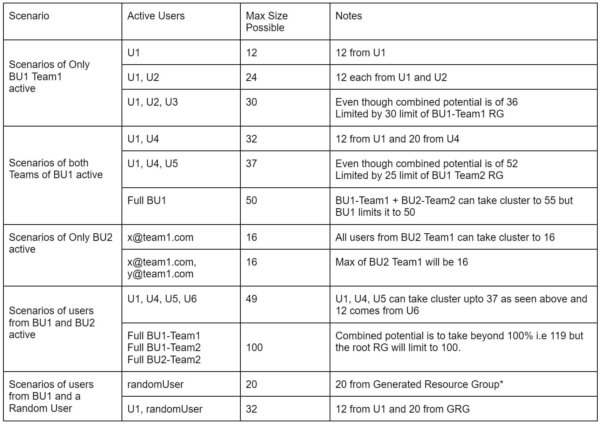

Detailed Analysis (Setup 2):

*For any user who does not map to any of the resource groups, the default limit is 20% of the maximum cluster size.

You can find the steps to configure the above setups in Qubole here:

https://docs.qubole.com/en/latest/admin-guide/engine-admin/presto-admin/resource-group-dynamic-cluster-size.html

https://docs.qubole.com/en/latest/admin-guide/engine-admin/presto-admin/resource-group-dynamic-examples.html

Resource Groups for Dynamic Sizing of Presto Clusters

Resource groups are an important construct in Presto to control resource usage and query scheduling. Extending them with scaling limits gives us a simple way to constrain autoscaling and to augment resource groups with cost isolation mechanisms. With this feature, admins can not only add user-level restrictions on autoscaling but also combine multiple clusters into one for simpler management and setup.

Future Innovations in Dynamic Sizing of Presto Clusters using Resource Groups

Our current solution for dynamic cluster sizing using resource groups solves only the cost isolation problem. On the other hand, existing mechanisms for resource allocation in Presto Resource Groups primarily use admission control to allocate resources in the desired proportion over an extended period of time. Users may still face a lack of resource isolation when multiple clusters are consolidated into a few larger ones. For example, consider a scenario in which Project1 was running on a 20-node cluster and Project2 was running on a 5-node cluster. These two clusters are consolidated into a single cluster with 25 nodes. Ideally, users of Project1 should get 20 nodes’ worth of resources, and users of Project2 should get only 5 nodes’ worth. This strict form of user quotas is not yet supported in Presto. We are working on a feature that will extend Resource Group based scaling to introduce resource isolation for customers.