AUTOSCALING

Downscale, upscale, and rebalance clusters in the cloud automatically, based on the SLA, priority, and workload context of each job.

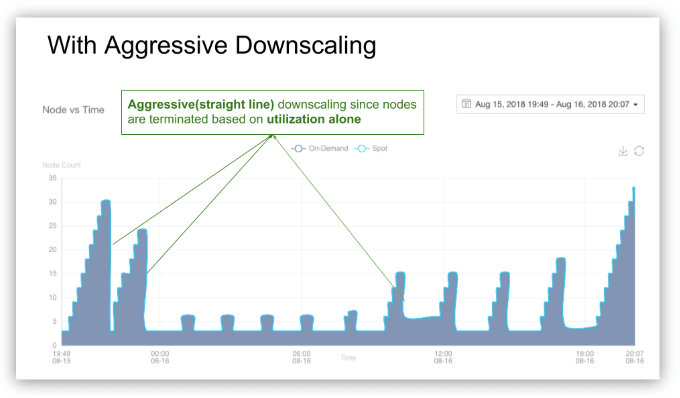

Downscaling

Prevent cost overruns by shutting down idle nodes upon job completion.

Use Aggressive Downscaling to rebalance workloads across active nodes and decommission idle ones without risking data loss. Clusters and nodes are recycled faster, which delivers cost savings along with stability, performance, and fault-tolerance benefits.
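The idea of decommissioning idle nodes "without the risk of data loss" can be illustrated with a small sketch. It assumes a hypothetical node model in which each node tracks its running task count and the data blocks stored on it locally (for example, intermediate job output); an idle node is removed only after its blocks have been copied to a node that stays in the cluster. The names `Node`, `aggressive_downscale`, and `min_nodes` are illustrative assumptions, not the platform's actual API.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical node model: running task count plus locally stored data blocks."""
    name: str
    running_tasks: int = 0
    blocks: set[str] = field(default_factory=set)


def aggressive_downscale(nodes: list[Node], min_nodes: int = 1) -> list[str]:
    """Decommission idle nodes after migrating their data to surviving nodes.

    Nodes with running tasks are never removed, and the cluster never shrinks
    below min_nodes. Mutates `nodes` in place and returns the removed names.
    """
    if min_nodes < 1:
        # At least one survivor is needed so migrated data has somewhere to go.
        raise ValueError("min_nodes must be at least 1")
    active = [n for n in nodes if n.running_tasks > 0]
    idle = [n for n in nodes if n.running_tasks == 0]
    # Keep just enough idle nodes to respect the minimum cluster size.
    keep_idle = max(0, min_nodes - len(active))
    survivors = active + idle[:keep_idle]
    victims = idle[keep_idle:]
    removed = []
    for i, victim in enumerate(victims):
        # Rebalance: copy every block to a surviving node (round-robin)
        # before the victim is decommissioned, so no data is lost.
        for j, block in enumerate(sorted(victim.blocks)):
            survivors[(i + j) % len(survivors)].blocks.add(block)
        victim.blocks.clear()
        removed.append(victim.name)
    nodes[:] = survivors
    return removed
```

The round-robin placement is a deliberately simple stand-in; a real scheduler would weigh free disk, network cost, and job deadlines when choosing where migrated data lands.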

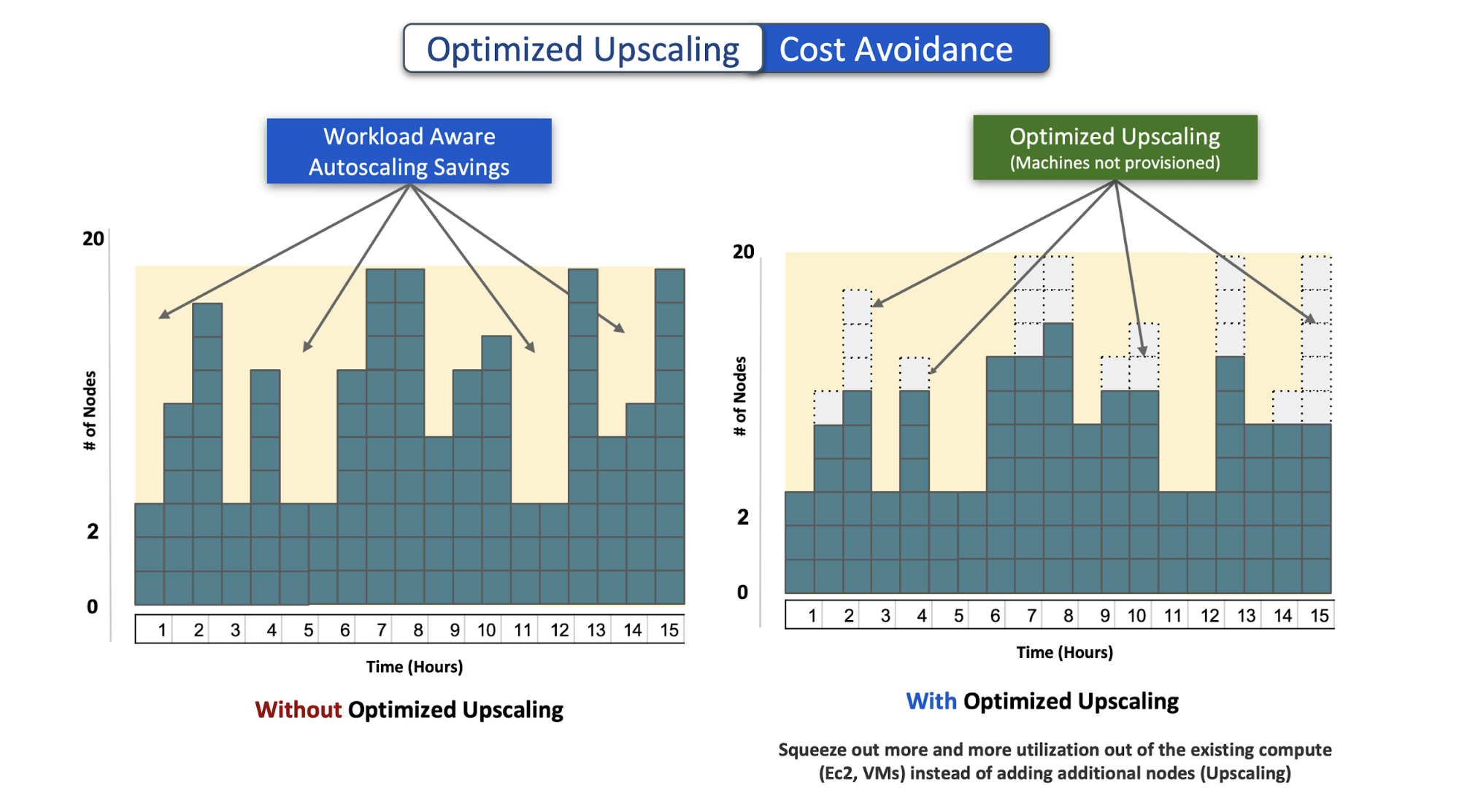

Upscaling

Make fuller use of existing compute nodes instead of adding new ones.

Optimized upscaling reclaims wasted or underutilized resources, which helps avoid unnecessary cost.
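A minimal sketch of "use spare capacity before adding nodes" follows. It assumes, for illustration only, that each pending task needs one slot, that each existing node reports a count of free slots, and that every new node adds a fixed number of slots; `plan_upscale` and its parameters are hypothetical names.

```python
import math


def plan_upscale(
    free_slots: list[int], pending_tasks: int, slots_per_new_node: int
) -> tuple[list[int], int]:
    """Fill spare slots on existing nodes first, then size the upscale.

    Returns (tasks placed on each existing node, number of new nodes to request).
    """
    if slots_per_new_node <= 0:
        raise ValueError("slots_per_new_node must be positive")
    placed = []
    remaining = pending_tasks
    for free in free_slots:
        # Recapture idle capacity on this node before considering new nodes.
        take = min(free, remaining)
        placed.append(take)
        remaining -= take
    # Only the tasks that did not fit anywhere drive the upscale request.
    new_nodes = math.ceil(remaining / slots_per_new_node)
    return placed, new_nodes
```

For instance, `plan_upscale([2, 0, 3], 7, 4)` places 5 of the 7 tasks on existing nodes and requests one new 4-slot node, whereas ignoring spare capacity would call for two.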

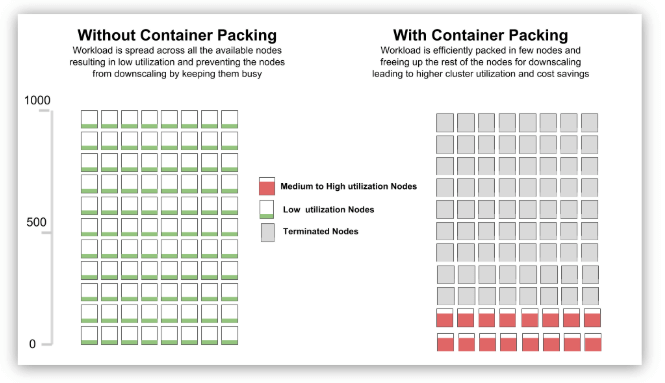

Data Allocation

Workload packing allocates workloads intelligently: it frees up larger pools of nodes for downscaling while preventing cluster hot spots and honoring data-locality preferences.

This novel, non-uniform resource allocation strategy further reduces the cost of running elastic clusters.
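One way to picture a non-uniform packing policy is the placement rule sketched below. It is an assumption-laden illustration, not the product's algorithm: a hypothetical utilization cap (`max_util`) stands in for hot-spot prevention, a per-node set of data keys stands in for data locality, and among the remaining candidates the busiest node wins, so lightly loaded nodes stay empty and become downscaling candidates. `PackNode` and `pack` are illustrative names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PackNode:
    """Hypothetical node: slot capacity, slots in use, and data keys held locally."""
    name: str
    capacity: int
    used: int = 0
    local_data: set[str] = field(default_factory=set)

    def utilization(self) -> float:
        return self.used / self.capacity


def pack(nodes: list[PackNode], data_key: str, max_util: float = 0.8) -> Optional[str]:
    """Place one single-slot task and return the chosen node's name (or None)."""
    # 1. Hot-spot guard: skip nodes that would exceed max_util after placement.
    eligible = [n for n in nodes if (n.used + 1) / n.capacity <= max_util]
    if not eligible:
        return None
    # 2. Prefer nodes holding the task's data, then 3. the most-utilized node,
    #    which concentrates work and leaves idle nodes free to downscale.
    best = max(eligible, key=lambda n: (data_key in n.local_data, n.utilization()))
    best.used += 1
    return best.name
```

Ordering the key as (locality, utilization) means locality always wins over packing; swapping or weighting the two terms is a design choice a real scheduler would tune.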