This is a guest post written by Shailesh Garg, Director of Engineering at RevX.

A programmatic ad-tech platform like RevX generates terabytes of data on a daily basis. To effectively process and leverage this data, we use big data tools like Hadoop for reporting and analytics. Our infrastructure is hosted in Amazon AWS across multiple locations globally.

This blog talks about our learnings of building a Hadoop cluster in AWS and a comparison of various options based on the Total Cost of Ownership (TCO).

Problem Definition

Build a Hadoop cluster that can be used to analyze hundreds of terabytes of data in the most efficient way possible. The workload will include a mix of hourly/daily scheduled jobs as well as ad-hoc queries for on-spot data analysis.

The two most important factors that govern setting up a Hadoop cluster are:

- Total HDFS cluster space required to store all data that needs to be analyzed

- Adequate number of mappers and reducers configured to handle varying cluster workload

Given this background, broadly, the following options can be considered for implementing a Hadoop cluster:

- Running static Hadoop cluster with EC2 instances in AWS

- Elastic Map Reduce (EMR), EC2 and S3

- Qubole, EC2, and S3

Option 1: Static Hadoop cluster with EC2 instances in AWS

In a static Hadoop cluster, data that needs to be crunched has to be stored in HDFS. For the sample problem statement mentioned in this blog, let’s assume that we need to build a Hadoop cluster that can store 100 TB of data in the cluster.

HDFS storage required = Data to be stored * Replication Factor / Recommended HDFS utilization %

Replication Factor: The Hadoop framework was built to take care of machine failures by replicating data over several machines. Generally, it is recommended that 3 copies of data should be stored in 3 different machines, so if one machine fails, other machines can provide the data.

HDFS utilization %: While processing data on the Hadoop framework, we run jobs that generate some transient and output data which need to be stored in HDFS as well. Hence, it is recommended that at any given time, HDFS space utilized should not be more than 60%.

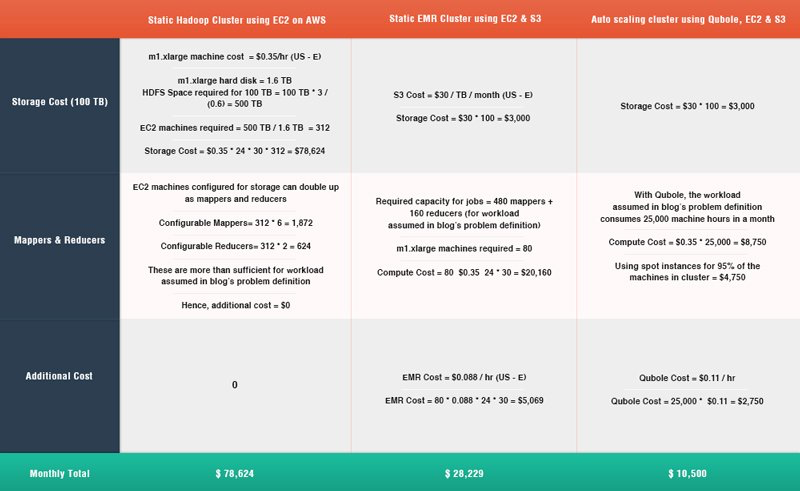

For someone having a 100TB data usage, the cost of a static Hadoop cluster will come out to be ~$78,000 (Pls. refer to table 1 for details)

Option 2: Static EMR cluster using EC2 and S3

S3 is the HDFS equivalent in EMR. Basically, in this case, you form a cluster using EC2 instances. Whenever a job is executed, data is copied from S3 to HDFS of the cluster. There is an abstraction layer involved and the user need not bother about S3 intricacies; EMR takes care of this internally. EMR clusters can be scaled up or down manually either by the click of a button or by calling AWS API. Using S3 as storage instead of HDFS has huge cost implications since S3 storage is very cheap – just $30/TB/month. Since S3 guarantees 99.99% availability, there is no need to replicate data as well. However in EMR, one has to pay processing costs separately.

Continuing with the sample example, the cost of an EMR cluster will be around ~$28,000 (Please refer to table 1 for details) which is ~65% less than the cost of a static Hadoop Cluster + EC2 machines combination (option 1). Pls. refer to table 1 for details

Option 3: Auto-scaling Qubole cluster using EC2 and S3

Qubole offers tools and services to manage Hadoop clusters over public clouds like Amazon, Microsoft, and Google. The main difference in using Qubole over EMR is that the Hadoop cluster can be scaled up or down automatically depending upon multiple factors like HDFS space, mappers, and reducers required by the current workload. If there is no job running, the cluster shuts down automatically. With auto-scaling, one can leverage spot instances too. Because of this, the total cost of Qubole managed auto-scaling cluster comes out to be the cheapest when compared with all other listed options

Continuing with the sample example, for someone using 100TB storage and using spot instances for 95% of machines, the total cost will come out to ~$10,500 which is ~62% less than the cost of EMR (option 2) and ~85% less than the cost of static Hadoop Cluster + EC2 machines combination (option 1). (Pls. refer to Table 1 for details)

Table 1- Cost Implications of Hosting Hadoop Cluster on different cloud technologies

Key takeaways for technology teams that are looking to implement their own Hadoop cluster over a public cloud-like AWS:

- S3 can be used as a cheaper data store instead of HDFS with technologies like EMR and Qubole. This alone has reduced our TCO by 65%.

- One of the big benefits of cloud technologies is auto-scaling which reduces cost significantly. By adopting Qubole, which has an in-built auto-scaling implementation, we were able to reduce costs by another ~60%.

- Auto-scaling systems also enable you to leverage spot instances which further helps in cost optimization. We are using ~95% of spot instances for our Qubole-managed Hadoop clusters without any stability or performance issues. EMR allows the usage of spot instances but spot instances don’t make sense for the static cluster which needs to be running 24*7.

- For auto-scaling clusters, the overhead of moving data from S3 to HDFS is offset by a reduction in wait time for mappers and reducers.

- Moving to Qubole also reduced our operational overhead costs of maintaining the Hadoop cluster to almost zero. This means a leaner engineering/ops team that can focus on solving real business problems. While doing your TCO calculations, make sure to include this aspect into consideration as well.

At RevX, we continue to innovate and adopt new technologies to solve challenging business and engineering problems. Optimizing our Hadoop infrastructure allows us more granular and efficient data analysis thereby gaining richer insights that eventually drive superior business performance.

This blog was originally posted here.