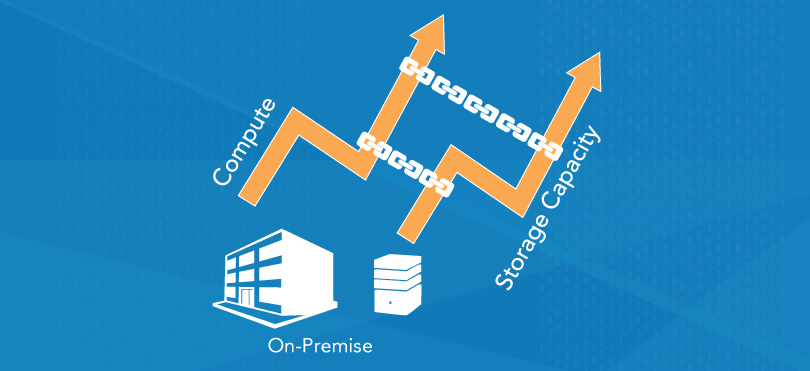

When Hadoop is deployed on-premises, compute and storage are combined on the same nodes. As a result, the two must be scaled together, and the clusters must stay on permanently; otherwise the data becomes inaccessible. In the cloud, compute and storage can be separated, for example with Amazon EC2 providing compute and Amazon S3 serving as the object store. This means each can be scaled separately depending on the data team’s needs.

Why does this distinction matter? Since compute and storage are tied together in an on-premises solution, elasticity is much harder to achieve and manage. On the other hand, a key advantage of cloud infrastructure is that it gives the data team fine-grained control over speed versus cost.

How This Applies to Big Data

While a key advantage of big data technology is the ability to collect and store large volumes of structured, unstructured, and raw data in a data lake, most organizations end up processing only a small percentage of the data they gather. According to recent research from Forrester, an estimated 60-73% of data that businesses store ends up not being processed. Because every node added to hold that data also adds compute, deployments that tie the two together end up paying for compute capacity that sits underutilized.

Big data workloads are also often “bursty”. In a fixed-size deployment, this forces data teams to provision resources for peak capacity at all times, which once again leaves resources underutilized during the longer off-peak hours. Many modern big data analytics technologies, such as Apache Spark, are designed for in-memory processing, so they are even more compute-intensive.
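To make the cost of provisioning to peak concrete, here is a minimal Python sketch. The hourly demand profile is invented purely for illustration and is not drawn from any real workload; the function names are likewise hypothetical.

```python
# Hypothetical demand, in nodes needed per hour, over one day.
# Illustrative numbers only: a quiet night, a short burst, a moderate afternoon.
hourly_demand = [4] * 8 + [20] * 4 + [6] * 12


def node_hours_fixed(demand):
    """Node-hours consumed when the cluster is sized to peak and left running."""
    return max(demand) * len(demand)


def node_hours_elastic(demand):
    """Node-hours consumed when the cluster size tracks demand hour by hour."""
    return sum(demand)


fixed = node_hours_fixed(hourly_demand)
elastic = node_hours_elastic(hourly_demand)

# Share of the peak-sized cluster's capacity that does useful work.
utilization = elastic / fixed

print(f"fixed: {fixed} node-hours, elastic: {elastic} node-hours")
print(f"utilization of the peak-sized cluster: {utilization:.0%}")
```

The shorter and sharper the burst, the lower the utilization of a cluster that is permanently sized for it.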

While mass data storage is becoming increasingly cheap, compute is expensive and ephemeral. By separating compute and storage, data teams can easily and economically scale storage capacity to match rapidly growing datasets while only scaling distributed computation as required by their big data processing needs.
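A rough way to see the effect is to count nodes. The sketch below assumes a simplified model in which every node in a coupled cluster contributes a fixed amount of disk, so the cluster must be at least large enough to hold the data. The figures (500 TB of data, 10 TB per node, 15 nodes of compute actually needed) are made up for illustration, as are the function names.

```python
import math


def coupled_nodes(data_tb, compute_nodes_needed, tb_per_node):
    """Nodes required when each node supplies both disk and CPU.

    The cluster must satisfy whichever requirement is larger.
    """
    storage_nodes = math.ceil(data_tb / tb_per_node)
    return max(storage_nodes, compute_nodes_needed)


def surplus_compute_nodes(data_tb, compute_nodes_needed, tb_per_node):
    """Nodes whose compute is paid for only because their disk is needed."""
    return coupled_nodes(data_tb, compute_nodes_needed, tb_per_node) - compute_nodes_needed


# Illustrative inputs, not measurements.
DATA_TB = 500
TB_PER_NODE = 10
COMPUTE_NODES_NEEDED = 15

total = coupled_nodes(DATA_TB, COMPUTE_NODES_NEEDED, TB_PER_NODE)
surplus = surplus_compute_nodes(DATA_TB, COMPUTE_NODES_NEEDED, TB_PER_NODE)

print(f"coupled cluster: {total} nodes, of which {surplus} carry surplus compute")
print(f"decoupled: {COMPUTE_NODES_NEEDED} compute nodes, data held in the object store")
```

With storage moved to an object store, the compute side can be sized to `COMPUTE_NODES_NEEDED` alone, and the dataset can keep growing without forcing the cluster to grow with it.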

Benefits for Data Teams

Scalability and Manageability

Separating storage from compute makes it more manageable to scale infrastructure to match bursty workloads and rapidly growing datasets. Each can be scaled up or down strictly according to its own demand, independently of the other, so the resources provisioned can match the storage and compute capacity actually required at any given time. The separation also reduces the complexity of managing multiple environments. For instance, production, development, and ad-hoc analytics processes can run against the same dataset, eliminating the administrative overhead of data replication and synchronization.

Agility

Decoupling storage and compute gives data teams greater agility. By relying on the cloud as the object store, data teams can get started on projects immediately rather than spending months building out a deployment. They also don’t have to know the compute and storage capacity they will need well in advance, which frees them from guesswork that, more often than not, results in either over- or under-provisioning.

In addition, since cloud vendors provide easy access to a range of machine instances, including niche types such as storage-optimized, memory-optimized, and compute-optimized, teams aren’t locked into a particular set of machines. They can quickly and easily switch to a different configuration depending on the use case, with minimal or no downtime. Flexible configurations let data admins decide to what degree to optimize for cost versus performance. For example, if a particular workload needs to be processed quickly and cost is not a key factor, the admin can configure more nodes to speed up processing. If controlling cost is critical, the admin can configure fewer nodes and use other cost-saving features such as auto-scaling and Spot Instances (more below).

Lower TCO

Decoupling storage and compute can lead to a lower total cost of ownership (TCO). Cloud big data providers offer a pay-per-use model, so organizations pay only for the storage space and compute capacity they actually use, each billed independently, rather than for capacity provisioned upfront for peak usage of both, as in a traditional big data deployment. In the AWS cloud, data teams can also take advantage of the Spot Instance market. Spot Instances are spare EC2 compute instances offered at a discount compared to On-Demand pricing, so using them can significantly reduce the cost of running big data applications or of increasing an existing application’s compute capacity.

It is important to note that heterogeneous clusters (for example, a combination of volatile but less expensive Spot Instances and stable but pricier On-Demand instances) ultimately lead to lower TCO than an all On-Demand cluster, and they are easier to form when storage is decoupled from compute. Otherwise, data loss, replication, and synchronization would have to be taken into consideration before a cluster could be altered. Finally, data teams spend less on administration, given that the majority of management requirements are eliminated or simplified.
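The arithmetic behind a mixed cluster can be sketched in a few lines of Python. This assumes a flat per-node hourly price and a fixed Spot discount, which is a simplification: real Spot prices vary over time, and Spot capacity can be reclaimed. The price of 1.00 per node-hour and the 60% discount are placeholders, not actual AWS prices, and `blended_hourly_cost` is a hypothetical helper.

```python
def blended_hourly_cost(on_demand_nodes, spot_nodes, on_demand_price, spot_discount):
    """Hourly cost of a cluster mixing On-Demand and Spot nodes.

    spot_discount is the fraction taken off the On-Demand price
    (0.6 means Spot costs 60% less than On-Demand).
    """
    if not 0 <= spot_discount <= 1:
        raise ValueError("spot_discount must be between 0 and 1")
    spot_price = on_demand_price * (1 - spot_discount)
    return on_demand_nodes * on_demand_price + spot_nodes * spot_price


# Placeholder figures for illustration only; NOT real AWS prices.
ON_DEMAND_PRICE = 1.00
SPOT_DISCOUNT = 0.60

# A 10-node cluster, first all On-Demand, then 4 On-Demand plus 6 Spot.
all_on_demand = blended_hourly_cost(10, 0, ON_DEMAND_PRICE, SPOT_DISCOUNT)
mixed = blended_hourly_cost(4, 6, ON_DEMAND_PRICE, SPOT_DISCOUNT)
savings = 1 - mixed / all_on_demand

print(f"all On-Demand: {all_on_demand:.2f}/h, mixed: {mixed:.2f}/h, savings: {savings:.0%}")
```

Keeping a core of On-Demand nodes is one way to trade some of the discount for stability. Since the data lives in the object store rather than on the nodes, losing a Spot node costs compute time but not data.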

This is part of a series exploring the benefits of cloud architecture. See the first post of the series here, an in-depth look at Spot Instances here, and come back for more on the economics of provisioning to the peak.