Introduction:

Users of Qubole Data Service use Hive queries or Hadoop jobs to process data that resides in Amazon’s Simple Storage Service (S3). S3 has many advantages including data security mechanisms and high reliability. However, S3 is much slower than HDFS and direct-attached storage. In this first of a series of posts, we dive into some of the improvements we have made to Hadoop and Hive to speed up communication with S3. These changes have resulted in dramatic performance improvements (8x in specific query processing steps detailed in this post). And most importantly – they have significantly improved the experience of our users.

Split Computation in Hadoop

A simple Hadoop job consists of a map and a reduced task. The map phase is parallelized by grouping sets of input files into splits. A split is a unit of parallelism. Multiple map tasks are instantiated and each of these is assigned a split. Hadoop needs to know the size of input files so that they can be grouped into equal-sized splits. Oftentimes, input files are spread across many directories. For example, two years of data, organized into hourly directories, results in 17520 directories. If each directory contains 6 files, this makes a grand total of 105,120 files. The sizes of all these files need to be determined for split computation. Hadoop uses a generic filesystem API – that was designed primarily with HDFS in mind. It includes a filesystem implementation on top of S3 which allows higher layers to be agnostic of the source of the data. Map-Reduce calls the generic file listing API against each input directory to get the size of all files in the directory. In our example, this results in 17520 API calls. This is not a big deal in HDFS but results in a very bad performance in S3. Every listing call in S3 involves using a Rest API call and parsing XML results which has very high overhead and latency. Furthermore, Amazon employs protection mechanisms against the high rate of API calls. For certain workloads, split computation becomes a huge bottleneck.

Faster Split computation for S3

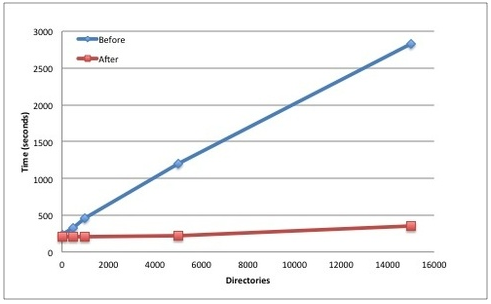

To solve this problem, we modified split computation to invoke listing at the level of the parent directory. This call returns all files (and their sizes) in all subdirectories in blocks of 1000. Some subdirectories and files may not be of interest to the job/query e.g. partition elimination may be eliminated some of them. We take advantage of the fact that file listing is in lexicographic order and perform a modified merge join of the list of files and list of directories of interest. This allows us to efficiently identify files sizes of interesting files. The modified algorithm results in only 106 API calls (each call returns 1000 files) compared to 17520 API calls in the original implementation. We compared the two approaches using a simple Hive test. In this test, we take a partitioned table T with 15,000 files but vary the number of partitions (a partition corresponds to a directory). We compare the performance of ‘select count(*) from T’. In the extreme case, this optimization shows a speedup of 8x!

Improving S3 read performance

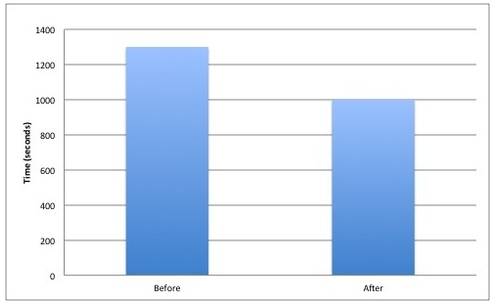

Another issue we noticed with S3 was that opening files took a significant amount of time – at least 50 milliseconds per file. This problem becomes pronounced when the input dataset has lots of small files and file open latency forms a significant portion of overall execution time. To alleviate this problem, we included an optimization wherein we open an S3 file in a background thread a little while before it is actually required by the map task. This hides the file’s open latency. One thing to be aware of is that if an S3 file is opened, but not read for a while, S3 returns a RequestTimeout and potentially penalizes the caller. We tested this optimization with a simple hive test. Our dataset consisted of 80000 files, each of size 640KB. We noticed an improvement of 30% in a count(*) query as a result of this optimization.

These are just a couple of ways we’re making the Hadoop stack play well in the cloud. The S3 I/O optimizations are part of the Qubole Data Service that is now generally available. Users can signup for a free account and get the benefits of this and many other optimizations we have made to build the fastest and most reliable Hadoop offering in the Cloud. If solving these sorts of problems interests you, please ping us at [email protected]. We’re hiring!