Motivation

One of the interesting things about using Hadoop and Hive in the cloud is that most data sits not on HDFS but on a cloud object store such as S3. The primary reason for this is cost. If data is stored in HDFS – then nodes must be kept running continuously (as local disk drives used to store HDFS data only retain data as long as the nodes are up). The cost of running such compute nodes is dramatically more expensive than storing data in S3 (and paying only for storage). Keeping data in S3 means that all data is remote from the compute nodes and while S3 performs well in general it is not uncommon to see significant variance in performance. A common practice to help with this is to move data into HDFS using distCp or a similar tool and then query it from Hive. This is a poor man’s implementation of caching. There is another closely related problem – data is not necessarily stored in formats that are optimal for querying. Hive and other parts of the Hadoop ecosystem allow enormous flexibility in dealing with data formats. Hive allows the use of SerDe’s (Serializers/Deserializers) to convert data into a relational form at query time. This technique of late binding is enormously powerful, but this flexibility comes at a cost. Queries frequently scan the same data repeatedly and re-execute parsing and deserialization code. This is not very efficient. Hive supports a number of more efficient formats for relational data (e.g. RCFile) and users often create temporary tables organized in these formats and rewrite their queries against them.

Caching as a Service

Caching in the cloud’s inefficient storage formats can provide considerable performance benefits. However, it is extremely difficult to do this manually for the following reasons:

- Different users might replicate the same data in different temporary tables leading to space wastage

- Rewriting queries to work against cached tables requires changes to application logic

- Invalidating caches when underlying data changes requires sophisticated logic

- The cloud offers some interesting tradeoffs which become important to decide when to cache what

In the spirit of the cloud, we decided to build caching as a service into the Qubole Data Platform! Our caching solution allows users to keep data in their own formats but offers the kind of performance they would expect from relationalizing this data into a high-performance format. Cache loading, invalidation, rewrites are transparent to the end-user. Cache maintenance has minimal overhead and is tiered automatically between ephemeral HDFS and S3, and can (if asked nicely) slice your bread for you. Jokes apart, we describe our design and some interesting characteristics of the cache in the following paragraphs.

Design

We explored a number of tradeoffs when designing the cache:

- Granularity: The granularity of a cache entry can be either at a file level or at the directory (Hive partition) level or somewhere in between (sets of files)

- Format: Data in the cache could be structured either in a row-major or a column-major format. Also, data can be packed into binary representations for primitive types for higher performance.

- Container: For columnar formats – data could be packed into a single container (like RCFile) or kept as separate files.

Our current design uses a columnar format at the file level with separate files for each column. For the kind of analytic queries we expect on our platform, column stores have enormous advantages over row stores. Our cache is integrated with Hive’s query execution and only reads those columns from the cache that are needed by the query. We picked a file-level cache since it simplified handling data load as well as invalidation. Our motivation for keeping a file per column was two-fold. For one, a file per column made it cheaper to populate the cache. A second reason was that we found seeks (and opens) on S3 to be very expensive. If data is stored in RCFile – then seeking operations inside such a container would have significantly impacted cache read performance.

Implementation

Cache Population

Our first implementation populated the cache by writing synchronously to HDFS (while a Hive query is running). There were two problems with this approach:

- HDFS itself is ephemeral – it is bought up and down on demand. This meant that the cache had to be repopulated each time the cluster was brought up.

- HDFS write performance caused queries to slow down if they were populating the cache as well.

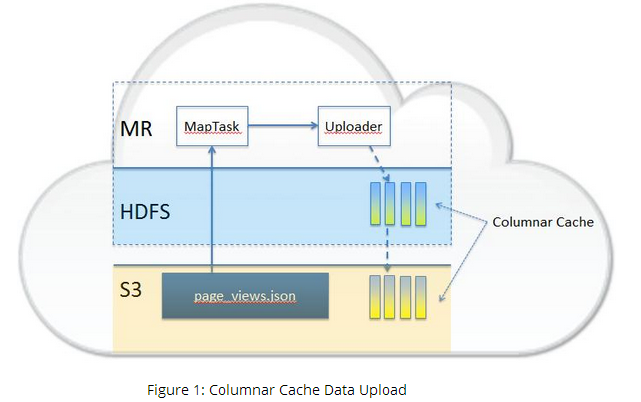

To allow persistence across clusters we realized we would want the data to be cached in S3 as well. To alleviate the performance issues – writing data out asynchronously seemed like the right approach. So we wrote a caching file system that buffers write on the local disk and asynchronously uploads files to both HDFS and S3. Surprisingly, our first cut at the caching file system was slower than writing to HDFS. There were two interesting lessons learned here:

- We were using the LocalFileSystem interface that Hadoop provides for writing to local disks and this has very high overhead.

- Only one of the available local disks was being used to buffer the cache writes (while HDFS was able to stripe across all available disks).

Our final implementation uses the Java File class and stripes buffered data across all available local disks. With this cache, population overheads have become minimal. A general schematic of the columnar cache and how it is populated is depicted in Figure 1.

Another issue we quickly hit was small file performance. The file-per-column approach ends up with a large number of small files if the source data itself consists of small files. To mitigate this, the cache automatically skips small files. We have also noticed that the fixed costs associated with processing very small files meant that columnar caching tends to not help on data sets with a large number of small files. We will talk about some of our techniques for handling small files better in a future blog post.

Cached Reads

Since our HDFS clusters are ephemeral, the S3 storage serves as a persistent store and the cache code will check both places for the presence of a cache file. When data is available on HDFS, it is preferred since we found HDFS performed better and more importantly showed more predictable performance. Also, we can store more data in HDFS than in S3 allowing for a bigger session cache and a smaller permanent one. A background process ensures that the permanent S3 cache is kept under the target size by deleting older cache files.

HDFS as a Cache

The initial versions of our Auto-scaling Hadoop clusters only used the core (initial) nodes of the Hadoop cluster to store HDFS data. We quickly hit a wall with this arrangement – as the performance of the cache became bottlenecked on the few data nodes available in the cluster. We realized we had to make all nodes work as Datanodes – but that lead to a vexing question – how would we scale down the cluster quickly? If we remove nodes wily-nilly while scaling down – then lots of (cache) files would go ‘missing’ in the file system and cause all kinds of IO exceptions. Waiting, instead, for cached data to be re-replicated before removing nodes made little sense. It would take a long time and the data was anyway backed up to S3. To solve these issues we made a few enhancements to HDFS to deal with cache files more efficiently. Cached files only have a replication factor of 1. When nodes are decommissioned, we simply delete affected cache files. This allows us to quickly resize our clusters. Additionally, the NameNode does not auto-commit file events under cache directories and instead delays these commits until the log is full (or another event that requires an immediate commit shows up). This minimizes WAL overhead from cache file events. Finally, we use HDFS quotas to ensure that cached files do not impact NameNode and DataNode memory usage.

Conclusion and Future Work

In our tests, the columnar cache improves simple query performance by 5x with commonly used (and well-written) SerDe’s. In fact, performance improves so much that other pieces of the Hadoop stack are beginning to dominate query processing times. Cache population overhead can be as low as 20% but is heavily dependent on the number of columns and the size of the underlying files. The cache is part of our overall data platform and is now generally available for signup. We hope that Hadoop and Hive users dealing with performance problems on the Cloud can get substantial benefits by running on jobs and queries on our platform. In terms of future work – one of the areas of interest is improved handling small files in the cache. Columnar storage also offers interesting possibilities in compression and query execution. If these problems interest you, Qubole is hiring – contact us at [email protected].